Foundations of Future AI

by Tom Veatch

The aim of AI is (understanding the design of a device with) traction in sucking chaos into order. A device like a program or a robot, or a human being.

Here are some things AI could maybe use. Integrated spatial perception and proprioception. Redundancy in bit representation. Light vs Recognition as aspects of Consciousness. Synthetic or integrated perception. A proper emotional/motivational system.

Internal Representation of Spatial Experience

A puzzle: How is space represented in neuron-equipped creatures, and how might it be in robots?

Worm Consciousness and the Home Depot Flash

In about 2003, I bought a house. There was a big basement, but it was cold in the winter and I wanted to play pingpong down there, so I thought I'd insulate the walls. One day I trundled off to Home Depot to buy maybe eight or a dozen 4' x 8' x 2" sheets of styrofoam insulation. I found some with a sheet of aluminum foil on one side, maybe to reflect away some infrared heat or something, which seems a bit useless inside a wall, I thought, but, hey, it was all they had, so I started to pull out sheets of this stuff and slide them on top of one another on the cart. Pull, stack, slide, pull, stack, slide. After maybe six of them were on there, I happened to touch the aluminum part of two different sheets with thumb and forefinger, and I saw something amazing to me, like a small miracle, a little blue spark of lightning hit my thumb where it was about to touch the other sheet. Wow! I thought, Cool!

What I didn't realize is that I must have charged up the sheets like a giant capacitor, in fact 4x8=32 square feet of capacitor, and every time I slid one across the other it was similar to turning a big electric motor, charging it up by movement of a conductor. So the lightning was the discharge of that stored voltage across the gap, right through my hand. Okay, the next thing I did was really stupid. (Can you guess?!)

Because I thought this was the coolest thing, I thought, let me try it more carefully, with both hands, so one finger on one sheet, and a finger on the other hand on another. Snap! Later I realized that was a mistake. But the moment of, wow, let me explain. You know in the cartoons there's the cat in the lightning storm, and it gets zapped, and everything turns black except you see the skeleton all in white. Well, it was just like that, everything suddenly went black, but instead of seeing my skeleton all in white, I saw this thin wiggly rope, or like a string with bends and curves in it, all in white, going across from forefinger up the arm, across the chest, then with something like a knotted bit, with a string hanging off it more or less perpendicularly for an inch or two, in the general area of the left middle of my chest, and then squiggling down the other arm to my opposite forefinger. I had this detailed, three-dimensional, spatial, instantaneous vision of this white string-like thing.

So of course next thing I did was I walked around the corner and sat down. I hadn't quite electrocuted myself to death, but definitely I needed a little rest, so I sat for several minutes, and a Home Depot person actually came by and asked if I was okay, I said yes, and a few minutes later I got up and I just went home. I could get my stack of insulation another day. Sorry guys, I left you a mess!

So that was what happened, and I think there's actually a lot to draw from this experience.

The practical lesson, of course, is, Don't Do What I Did. It takes only a small current through the chest, measured in milliamps, to endanger the heart, as one learns in plumbing school. So even if this was not very many amps, it was a lot of volts, to be sparking across an air gap, and it would have been no surprise if it were dangerous or even fatal. Home Depot, take note.

So I never did that again, learned that lesson.

But there's more to it than just that.

Explaining the Flash

I think about this from the perspective of a cognitive scientist, a computational psychologist trying to understand what the world must be like for something like that to be able to happen. And I don't mean electrons and capacitors and voltage flows, I mean the nervous system of humans and even other animals.

You might not think it's a mystery, but hold on a second. What happened was a clear three-dimensional spatial perception of an interior electrical pathway. I remember thinking about this, and saying to myself, how can you tell people so they won't dismiss your perception? The non-dismissable part is the string hanging off to the side. The knot I saw had the position and size of a heart ganglion (a knot of nerves that run the heart) and the string hanging off to the side coincided with one of the nerves that are known to go from heart ganglia to other places in the heart. That this was some alternative concoction of the evolved human mind, unrelated to the known anatomy, seems unlikely.

Isn't it obvious that we're talking about a direct, internal, and essentially spatial (because three-dimensional) perception of the location of nerves themselves within the body? My question is, How could that happen? What must the body and nervous system be like for that experience to occur? Yes, we have physics. If we assume the lightning pathway was a sequence of neurons, making up different nerves, recruited by the rules of physics to serve as the lowest-resistance pathway from finger to finger, then certainly all the neurons on that pathway got lit up. I mean, their job is to conduct electrical charges, so clearly they did that.

But this is a lot deeper than merely charge conduction. This is spatial information REPRESENTATION.

I always thought a neuron pretty much tells you its inputs are above some threshold, when suddenly its outputs are sparking up. That's information TRANSFER, moving it from one end of the neuron to the other. And I always knew that there is information PROCESSING in the function that adds up the inputs to see if they hit a threshold to send a spike down to the other end. That's like a (location-free) computational neural network style multiply-add-threshold function (CNN below), and it can be used to do information processing on the (vector of) inputs, for example, to classify whether there's a pattern in the inputs, and bingo, this fires off and therefore there must have been that pattern in the inputs. So you have a pattern detector neuron, which is an information processing unit, that you could use in any number of cool ways to detect complex patterns or differences between patterns or to control complicated patterns like movements, etc., etc. I always thought that pretty much captured the idea of computational neural networks being good for AI systems and good as models of how people and animals and anything that processes information might well go about doing it. CNNs. But THIS is different.
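Here is a minimal sketch of that multiply-add-threshold unit, just to pin down what "location-free" means. The function name, weights, and threshold are made up for illustration and are not taken from any particular library.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Hypothetical detector for the pattern "first and third inputs on, second off".
weights = [1.0, -1.0, 1.0]
threshold = 2.0

print(threshold_unit([1, 0, 1], weights, threshold))  # 1: pattern present
print(threshold_unit([1, 1, 1], weights, threshold))  # 0: second input vetoes
print(threshold_unit([0, 0, 1], weights, threshold))  # 0: not enough evidence
```

Notice that nothing in the function says where the unit sits. You could shuffle a network of these around in space, or give them no positions at all, and every output would be the same.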

THIS says that the firing off of a neuron carries the information of its location in three dimensional space.

So I asked a neurologist once, after I told her this story, does the firing of a neuron carry the information of its location in three dimensional space? Without pausing to think she immediately said Yes, of course it does.

So maybe so, maybe it does. Maybe, for example, there are parallel neurons in every nerve with two speeds, and when the signal passes through the fast and slow paths, the delay between arrivals indicates the originating distance (and here, in my Home Depot shock moment, there was an origination everywhere along the path). Or, maybe transmitting axons or neurons conduct locally-originated signals in both directions with an echo-generating structure at the end, so that an arriving signal's originating distance can again be calculated. As physicists know, from an impulse or impact in a swimming pool, the shape and size of the pool can in principle be reconstructed from the wave pattern that arrives at another point, because the walls all reflect and the speeds are all known, and so when a delayed impulse copy (an echo) arrives at a detector, a reflecting wall at the delay-determined distance can be inferred. So perhaps the signal is quite rich and detailed: if a pulse originates somewhere and its echoes travel along the neuron, then the timing and shape of what arrives tell you when, and how far away, and in what shape of swimming pool, so to speak, it started, all encoded in the signal itself. Or, maybe there is some other mechanism for transmitting location in the signals of a network of biological neurons. I only thought of two.
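The two-speed idea can be made concrete with a little arithmetic. This is only a sketch of the speculation above, not a claim about real nerves: the function names are hypothetical, and the two conduction speeds are illustrative numbers I picked, not measurements. If the same event travels a distance d down a fast path and a slow path, the gap between the two arrivals is d/v_slow - d/v_fast, and that can be inverted to recover d.

```python
import math


def arrival_delay(distance, v_fast, v_slow):
    """Gap between slow-path and fast-path arrival times for one event."""
    return distance / v_slow - distance / v_fast


def distance_from_delay(delay, v_fast, v_slow):
    """Invert the delay: d = delay * v_fast * v_slow / (v_fast - v_slow)."""
    return delay * v_fast * v_slow / (v_fast - v_slow)


# Illustrative speeds in metres per second (assumed, not measured).
V_FAST, V_SLOW = 60.0, 2.0

for true_distance in (0.1, 0.5, 1.0):
    delay = arrival_delay(true_distance, V_FAST, V_SLOW)
    recovered = distance_from_delay(delay, V_FAST, V_SLOW)
    print(true_distance, recovered, math.isclose(true_distance, recovered))
```

The receiver never needs to know when the event happened, only the gap between the two arrivals, which is what makes the scheme attractive.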

I don't know. No such mechanism is known or proven to my knowledge, despite this inference from my Home Depot flash experience. Maybe the neurologist had something in mind, or on the other hand, maybe she had no idea what I was talking about and wanted me out of her office! But I personally can't reason myself away from this point; a direct experience is the strongest possible evidence for the person who experienced it, me. You, on the other hand, must judge for yourself.

What it means to me is, Every neuron does this. This didn't get evolved in a late stage of human evolution just for Tom to have a shocking experience, a certain triggered hallucination, at Home Depot. It could only have happened a long long time ago and been part of the nature of what a neuron is like from the beginning. How else? Because that would explain it; what else does? There's no selection pressure, no point, in evolving spatial perception of interior body parts that we never need to feel. No, if a random nerve inside Tom's chest does it, then why wouldn't every neuron do it, and then the neurons in a worm would do it too, and in every animal.

Close your eyes. Are you aware of the locations of the different parts of your body? Of course you feel pressure and temperature on the different parts of you that are touching different things outside of you, but do you also feel the interior of you? Muscles that might be sore? Lungs expanding and contracting? Air blowing through your nose? When you perceive these things, do you perceive not only what's going on but the different locations of everything that is going on?

Yes. That's what I'm saying.

No lens, retina, or vision faculty seems to have evolved to see inside one's intact skin, the way they have evolved to see outside the body. Yet (A) our system does perceive inside our skin. So (B) it has the capacity to do so. So (C) it is built in such a way that doing so is possible, is part of its design. Yet it cannot do so with CNN, the location-free artificial computational neural network model. (A CNN is composed of nodes that without changing the computation could be anywhere or nowhere, could be an abstract vector of nodes with no location or distance information in the system, only connections, a list of links or paired endpoints, irrespective of location.) So (being gods, as we are, let's say) we need to build in spatial perception somehow, and why not at the bottom in the basic design? Then spatial perception can be useful everywhere in the evolutionary tree, as obviously it would be. To me it's pretty clear we humans, and maybe other animals, have some kind of integrated spatial awareness system.

Layering proprioception

An integrated spatial awareness system might or might not involve an extra modelling level like a little television set in the head, or a little homunculus model of yourself, on which the relative locations of things in the body are represented upstairs, so the upstairs systems can actually look at them. Well, we know that's true, the brain's body-map homunculus is well established. But then you'll need another homunculus model to model where things are in the model, and where does it stop? Ockham's Razor would say Stop at the Start: minimize the explanatory layers. If so, maybe part of that model is the sensor system itself, and when you feel it in your legs, it's your legs that are carrying out the internal cognitive representation of the feeling in the legs. To some degree that has to be so.

Like, once in college after massage school, my old SLE friend Chuck Wilburn asked me to massage his shoulder, the left subscapular, because he couldn't work on his paper because his shoulders were tight and sore inside the shoulder blades. So next day he thanked me, he had finished the paper and could concentrate because he wasn't in pain. (Massage is palliative; everyone should get one every week during retirement: that's what you're saving for, hello!) But I wasn't happy about it, myself, because I couldn't go to sleep for two hours that night, because of a pain, guess where, in my shoulders inside the shoulder blades!

Obviously in my doing bodywork on him I was trying to understand what it was like to feel what he was feeling, and my representational systems which were used in figuring out his feeling included my own body, and evidently to understand him, I myself felt in my body what he felt in his body. In the general case this is empathy, here taken to the level of the sense of what it's like to inhabit your own body. So yes, in the body itself (or the neurons through the body) (if you are willing to believe that my pain was in my body) is the intelligence of spatial awareness and even of empathetic understanding of suffering. That would be Ockham's response to the proposal of additional layers of representation of bodily feeling. I'll call it an open question.

Another example. An Anti-Ockham redundancy occurs when joint ligaments redundantly hold the ends together, and when agonist/antagonist muscle pairs do the same thing, both being jointly and redundantly activated. Actually, stronger, finer, more perfect bodily control depends on agonist-antagonist balancing. Yet the representation of a swing of a joint this way instead of that, by itself on one side, or by both sides in a somewhat overbalanced balance, is both redundant and more effective. Ockham doesn't win every fight.

Next point. It would seem evolutionarily convenient for there to be a built-in information representation for space in the activity of neurons. Because then everything knows where it is, and control and perception and reaction all make sense of the parts of you being where they are through the fundamental infrastructure of neural representation of information. You don't exactly need some independently evolved system to take these pipes (neural signal reception endpoints) over here and declare that somehow they tell you what's going on in the left thumb, and these other ones over here tell you what's going on in the right. That gets super complicated, except that CNN, or non-locational neural network processing, has no other way to do body proprioception than that. CNN points out how the connections carry the information, so current work is focussing on neurons sending out dendrites and axons far away, say, inches away, and it's an amazing mystery we hope to solve with biochemistry how they know to send out connections to the exact places they do. But now CNN advocates, who oppose the idea of a low-level capability of location proprioception, will have to come up with another equally complex system for the parts figuring out where they are, so that activities within them carry the information of their location. It might be true that a post-hoc system evolved, but in the absence of actual relevant evidence it seems like a lot of unnecessary system-building if a simple low-level design could solve it from the start.

To me this is Very Strongly Suggestive that spatial perception is a low-level neural capability, and that when you perceive stuff in space in your body, it is using this same ability. (You wouldn't say that humans specially and uniquely evolved it just so that they could perceive white strings going across the chest during an electrical discharge event, would you? I didn't think so.) And since surely you are conscious of spatial awareness when you are spatially aware, this is approximately the same thing as saying that Worms also have Consciousness. Every animal with a nervous system presumably carries spatial awareness in the activity of its neurons, and the awareness which we have cannot, by Ockham's Razor, be called mysteriously separate, separately evolved, separately working by separate mechanisms, just because we're humans and special and different. No, rather, ours must share those same evolved capabilities. Since those capabilities in us amount to consciousness (are you not conscious of your body? is that not consciousness?), it must also be true that the same fundamental spatial consciousness is present in every living animal.

Proprioception, Control, Coordination, Learning.

Consider the engineering problem of robot spatial proprioception. For a robot to operate effectively, moving itself in space and manipulating other things in space, we might reasonably consider that the robot ought to have effective spatial representation and monitoring systems. If it can't tell what its engineered body parts are doing, how will it be able to pick up the cup, or avoid hitting itself on the door, or keep from falling down? We have electronic sensor components for light, heat, magnetism, gravity, momentum changes, etc., and a good programmer with all those devices hooked up to serial ports can digitize their readings and write them onto a dashboard. Sure. But let's propose a different design. Suppose the robot has a bunch of channels in it, whether of air, water, or charge, and let's assert that any signal initiated in any part of the network of channels propagates at a finite, measurable, and usefully interpretable speed throughout the network. Thus a pop that starts in the middle of a leg will produce a pop at the inner end, as well as an echo of what bounced off the far end. From the echoes, one might infer the locations of the activities that initiated them, as well as potentially the shape or architecture of the whole network of channels. An echo chain is a spatial representation.

So maybe it would be a good robot proprioception design to have echoing transmission channels and a mapping of overlapping echo chains to spatial representations of what is going on where, inside the robot's system.
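To make the echo idea concrete, here is a toy sketch for a single straight channel. Everything in it is an assumption for illustration: one channel of known length, a detector at the inner end (position 0), a perfectly reflecting far end, a constant propagation speed, and hypothetical function names. A pop at position x reaches the detector directly after x/speed, and its echo off the far end arrives after (2*length - x)/speed. The detector does not know when the pop happened, but the gap between the two arrivals is enough to place it.

```python
import math


def echo_arrivals(pop_position, length, speed):
    """Times at which the direct pop and its far-end echo reach the detector at 0."""
    direct = pop_position / speed
    echo = (2 * length - pop_position) / speed
    return direct, echo


def locate_pop(echo_delay, length, speed):
    """Recover the pop position from the gap between direct arrival and echo."""
    # echo - direct = 2 * (length - x) / speed, so x = length - speed * gap / 2
    return length - speed * echo_delay / 2


LENGTH = 1.0   # channel length in metres (assumed known from the body plan)
SPEED = 10.0   # propagation speed in metres per second (assumed constant)

for x in (0.2, 0.5, 0.9):
    direct, echo = echo_arrivals(x, LENGTH, SPEED)
    estimate = locate_pop(echo - direct, LENGTH, SPEED)
    print(x, estimate, math.isclose(x, estimate))
```

A real body would have branching channels and overlapping echoes, so the decoding would be much harder than this one-line inversion. The sketch only shows that the location information really is in the timing.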

Benefits: It scales with the body. Adding channels is easy. It makes unnecessary and redundant a separate encoding of space, a separate class of sensors for spatial distance within the body along with their separate signals, and separate decoding and integration algorithms along with the hardware to implement them. Just let the signals add, and the resulting signal carries all the echoes of all the distant parts of the system. Signal sum equals information integration.

To summarize, SOMETHING must be responsible for the Home Depot flash within your humble witness's experiential being; if not a low-level neural representation of space effective across all neuron-containing animals, then what? I offer a direction, that an imaginable answer might be a (transduced to neuro-electrical signalling) sonic or perhaps echoic representation of space within the neural channels. Maybe you can think of others. It's a matter of research, at this point. But my conclusion is that my sense of space is very unlikely to be qualitatively different from that of the worm, whose neurons may also echo and represent distances and activities and whose inner integrating systems may put them together in the same way mine do.

If you want to take this quite a bit farther, note that certain Buddhist doctrine asserts that divinity has the character of spaciousness: "Void". Perhaps that doctrine is identical to the claim that an aspect of consciousness is somehow fundamentally an awareness of space. We are led, here, then, to a universalist argument asserting an equal status for the (spatial) experience of more than just humans. Darwin was derided for saying Humans came from the Ape; perhaps I shall be derided for saying Human divine experience is no different from that of the Worm. But the message of both the evolution argument and the intrinsically-spatial neurocomputation argument is, Stop disrespecting those you consider beneath you, you're not so special as all that. I like that message well enough, as an advocate for the high virtue of humility. But you can stop at the puzzle, How is space represented in neuron-equipped creatures, and how might it be in robots?

The Biological Bit

START: Consider a multilayer retinal map of analog neurons, each locally connected with preceding layers and globally within the layer, with a perhaps Hebbian learning algorithm, such that some deeper layer, having a pattern of activation, becomes imprinted. Somewhat like holography, but instead of each point carrying the whole picture, here it's that all points together carry multiple pictures. Then an activation pattern striking this layer from the feeding layer resolves, through the mutual activation and inhibition effects left by the imprint operation, to one of the discrete, integral number of patterns thereon. The represented information is a bunch of intensity values with maybe some parameter values for thresholding functions in a big connection matrix.

Here an analyst could consider that a single bit distinguishes two whole patterns, using that bit from outside the system to pick out one whole pattern as opposed to the other whole pattern, but there is no categorical, digital or binary representation within the array or the network, at the global level considered by the analyst, except emergently, as seen by the analyst outside the system. It’s not there. It behaves like it, but it’s not there. What's there is a massively parallel, analog computing system.
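One standard toy model with this flavor is a Hopfield-style associative memory trained with a Hebbian rule. The sketch below is an assumption-laden stand-in, not the retinal map itself: it uses a single layer, units that take values +1 or -1 instead of analog values, two hand-picked patterns, and hypothetical function names. What it does show is the key point: the two pictures are smeared across one shared weight matrix, a noisy input settles onto one picture or the other, and the bit that says "which one" is stored nowhere inside the network.

```python
def train_hebbian(patterns):
    """Imprint patterns into one weight matrix: w[i][j] += p[i] * p[j]."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def settle(weights, state, max_steps=10):
    """Update all units together until the activation pattern stops changing."""
    state = list(state)
    for _ in range(max_steps):
        new_state = []
        for i, row in enumerate(weights):
            field = sum(w * s for w, s in zip(row, state))
            # A unit keeps its current value when its input is exactly balanced.
            new_state.append(1 if field > 0 else -1 if field < 0 else state[i])
        if new_state == state:
            break
        state = new_state
    return state


PICTURE_A = [1, 1, 1, 1, -1, -1, -1, -1]
PICTURE_B = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hebbian([PICTURE_A, PICTURE_B])

noisy_a = [-1, 1, 1, 1, -1, -1, -1, -1]   # PICTURE_A with its first unit flipped
noisy_b = [1, -1, 1, -1, 1, -1, 1, 1]     # PICTURE_B with its last unit flipped

print(settle(weights, noisy_a) == PICTURE_A)  # True
print(settle(weights, noisy_b) == PICTURE_B)  # True
```

Only the analyst, comparing the settled state to the two pictures from outside, ever holds the answer "A" or "B" as a single bit.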

Consider another example. Sleep vs non-sleep. It takes only a single bit, considered logically, to distinguish the two. Yet a thousand intracellular clocks in different tissues and cells track each other and change the internal states of those tissues as sleep turns to non-sleep and vice versa. The clocks coordinate loosely, and some are more in charge than others, like Satchin Panda's blue light melanopsin detectors in the eyes, but in no sense does a single bit encoded singly in some abstract central logic machine actually exist in the mechanisms of the body in anything much like the way that a certain memory transistor's voltage level, being set in a certain range and thereby representing +1, does in a computing machine. A bit is minimal in discrete electronics and computing machinery, but it is redundant and emergent in biology.

So no, instead, the many tissues and many many cells, and many mechanisms that differentiate sleep vs non-sleep jointly operate in such a way that a single logical bit, [+sleep] and [-sleep] can describe them rather well to an excellent first approximation. So much so that if you don't make the gross-level logical distinction, then you fail to capture the essence of the process, the shared aspects of the many mechanisms of the process, the benefits of the process, you lose the basic idea, which is enough to lose the functional, logical, and evolutionary significance of it. You attain ignorance in the trees having lost the forest.

In linguistics the study of the sound system has this Janusian, two-faced reality, the emic and etic, the logical/symbolic/discrete and the physical/variable/continuous. The phonologists break distinctions into networks of logical bits labelled with locations along the vocal tract or sound-generating methods, such as [+voiced, +labial, +stop]. They get the gross and functionally-significant structure more or less right. On the other hand are the phoneticians, who do not shut their minds to the underlying detailed reality whereby the many nerves and muscles and even passive elements like teeth and bone which control the closing of the lips are carefully choreographed in aligned-yet-shifted trajectories of both agonistic activation and inhibitory, antagonistic activation, to produce complex, indeed intricate, unbelievably rapid sound contours which are nonetheless differentially detectable by human ears, loud enough for communication at waterfalls, beaches, and parties, and artistic or emotional for shared emotional appreciation or social significance. [+-labial] doesn't capture it all. Yet it captures an important essence, a sufficiency for reasoning about how the system works to at least a good first approximation. So we rise above, and look at both.

Watching only the mutual approach and retreat algorithms of fish, birds, locusts, you lose the emergent group behavior, which makes not just pretty waving pictures in the sky or ocean but also the functional logic whereby evolutionary time is survived by such tightly-clustering individuals: that they make groups that behave as the groups do is the reason enough of them survive as individuals to make such groups in the future. Certainly, a scientist is interested in the low level details, the mechanisms; but no less in their evolutionary significance, which is functional, and therefore expressible in logic, and capturable by symbols.

Here: What is a Symbol?

Whatever set of circumstances that a sensory detector responds to in a particular way constitutes the (response-defined) category that it detects. The response is itself like the implementation of a category; the set of all things that produce that response is that category of things. Response A to some subset of the universe of inputs, and Response B to the rest, is a system that can be described as categorizing the universe of inputs in this neural-net universe.

As long as behaviors are logically distinguishable (and survival vs not-survival is the primeval distinction here, from which all others derive), so long we may consider the behaving system as having cognitive aspects, for it must behave in those distinct ways, and thus have some internal system for making the difference as well as differently carrying them out. Distinct: Logically distinguishable, predicatively, optionally selectable.

Amoeba cognition may be limited to the biochemical systems implementing a logic that distinguishes something equivalent to “approach-and-engulf” in the context of food versus something like “flee” in the context of some toxicity gradient. All of that may be characterized by biochemical detection, signaling/cuing and flagellum-contracting cascades, but the functional logic of yummy:go, however you want to abstract it, must apply to the system or else a system that depends on movement towards food will not survive to participate in its particular evolutionary pathway any more. The analyst will better be able to understand the biochemical transformations if the logical information sequences are worked out. And the system as a whole once understood at both the functional logical level and the biochemical levels can truly be said to be understood. And indeed a biochemical cascade without a survival-compatible functional logic that governs it or that it implements, or, one that doesn't do something distinctively useful, would be more expected to spin out into a vortex of entropy than to be selected for evolutionarily for the preservation of the species.

In this context what is a signal, what is a cue, what is cognition?

A cue is a feature of environment (possibly conspecific) used by a receiver to influence behavior. A signal is a cue where the (generally conspecific) sender isn’t necessarily always sending the signal all the time.

Cognition: Cognitivity, when I got my BA in “Linguistics and Cognitive Science” in 1984, essentially meant an attributed psychologicality of systems modeled mathematically and by computers. So for example Chomsky feels no responsibility to clarify the neural bases of “Merge” or syntactic C-command, but declares his work that asserts these cognitive processes is psychological work nonetheless. Similarly Veatch, 1998, attributes a small logic to the event of Humor perception, but does not go into detail about the biological implementation of said logic, leaving to others the work that makes the abstract and functional logic compatible with mirror neurons and pain receptors and the fear and disgust and serotonin circuits and the low-level, actual physicality of the minds/brains that carry it out. So in practice we blunder along with our ASCII or now UTF8 lexicons and imagine some unspecified relationship between these computer files full of bits and the human mind/brain with its linguistic competence. It’s kind of BS. So let’s try to get a little deeper then.

GOTO START.

In a biological, that is, in a distributed, connectionist representation, consider two widely different gestalts or activation patterns, each containing rich and detailed form: each could be referenced from outside the system as one instead of the other using a logical, Boolean, computational bit, but the system itself may have no such binary-valued Boolean in it except as a viewer describes it from outside. Such is a biological bit. An emergent contrast. Do we need computer-style bits for categorical perception, which matches rich inputs strikingly better to one or the other rich form? No. For selection or control? Maybe. The analyst might say, Ah, although we have redundant representation and redundant control, let's instead analyse a rich system as a minimized logical structure with bits, and take all the redundancy and correlated information out of the picture. Yes, of course that can be done by an analyst, like a linguist distinguishing vowels into bit-labelled categories like [+high] and [-high]. But a reliable system is typically a redundant system, so the minimized logical structure inferred by the analyst may exist nowhere but in the analyst's analysis.

Consider perception as matching sparse inputs to stored, maximally-detailed patterns, and consider perceptual classification as identifying the best-matching stored pattern given the limited detail from current perception; then such a system can experience an "apparent perception" containing all the detail from the classification's stored percept.
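A minimal sketch of that idea follows, with everything in it invented for illustration: the stored "percepts" are short lists of made-up intensity values, the sparse input is a handful of observed positions, and the match score is a plain squared difference over just those positions. The function name is hypothetical too.

```python
def best_match(stored, observed):
    """Return the label and full stored pattern that best fits the sparse input.

    stored   maps a label to a full, detailed pattern (list of intensities)
    observed maps a few positions to the intensities actually sensed there
    """
    def mismatch(pattern):
        return sum((pattern[i] - value) ** 2 for i, value in observed.items())

    label = min(stored, key=lambda name: mismatch(stored[name]))
    return label, stored[label]


stored = {
    "tree": [0.9, 0.8, 0.1, 0.7, 0.2],
    "bush": [0.4, 0.9, 0.6, 0.2, 0.3],
}

# Only two of the five positions were actually sensed.
observed = {0: 0.85, 3: 0.6}

label, apparent_percept = best_match(stored, observed)
print(label)             # tree
print(apparent_percept)  # [0.9, 0.8, 0.1, 0.7, 0.2]
```

The system sensed two numbers and ends up holding five. The other three come from memory, which is the "apparent perception" described above.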

Thus there may be a bit structure for things if you want to look at it that way, that is, by merely a logical reference or invocation, but what is invoked may still be a rich analog representation of the richness of analog high-dimensional reality.

Ockham's Razor, Chomsky's Minimalism, Kolmogorov complexity, and other tools all assert the reasonable and proper methodological claim that the minimal description is best: the fewer bits in your model, and the less internal correlation of representational elements, the better. But when you have noisy signals and information, then even Shannon will demand redundancy to achieve robustness, and the biological requirement is not minimalism but robustness, for one bit wrong in a noisy biological information processing system might lead to a wrong turn and death. I don't say Abandon Ockham; I say look for evidence of redundancy, of robust systems, and do not automatically reject models which distribute information in correlated and repetitive layers where those models can mirror the performance of biological ones.
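The simplest engineering illustration of redundancy buying robustness is a repetition code with majority voting: send every bit several times and let the copies outvote the noise. This is a textbook toy, not a model of any biological mechanism, and the function names, the five copies, and the 10% flip rate are all arbitrary choices for the sketch.

```python
import random


def encode(bits, copies=5):
    """Repeat every bit `copies` times (the opposite of a minimal description)."""
    return [b for b in bits for _ in range(copies)]


def add_noise(bits, flip_probability, rng):
    """Flip each transmitted bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in bits]


def decode(received, copies=5):
    """Majority vote within each group of copies."""
    decoded = []
    for start in range(0, len(received), copies):
        group = received[start:start + copies]
        decoded.append(1 if sum(group) * 2 > len(group) else 0)
    return decoded


rng = random.Random(0)  # seeded so runs are repeatable
message = [rng.randint(0, 1) for _ in range(1000)]

bare = add_noise(message, 0.1, rng)
protected = decode(add_noise(encode(message), 0.1, rng))

print("errors without redundancy:", sum(a != b for a, b in zip(message, bare)))
print("errors with 5 copies:     ", sum(a != b for a, b in zip(message, protected)))
```

With five copies a decoded bit is wrong only when three or more of its copies flip, which happens far less often than a single flip. The price is a description five times longer than the minimal one, exactly the trade the Razor would refuse and the noisy channel demands.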

Light and Recognition

Some Hindu traditions, notably Kashmir Shaivism, characterize Consciousness as having two aspects, prakasha or light, and vimarsha or recognition.

Prakasha is the aspect of your awareness of things as being present to your subjective awareness. You see the tree and it shines forth in your awareness, now again you still continue to see the tree, there it is with all its parts spreading forth now in the yard of your perceptual universe, continuous over time, still visible, still impinging on, constituting a sort of vibrating ongoing percept in, your awareness, it again continues to show itself to you, in a way as though it were a steady source of light, shining over time at you. It has its qualia in the sense of the felt experience.

On the other hand, vimarsha, or recognition, is the aspect of your awareness of things as being of one category or another. You think of that greenery as bush or as tree based on your recognition or vimarsha of what it is. Categorization. Once known you later still know it but the categorization process coming to its first conclusion is the recognition process, whereas the maintenance of the knowledge of that categorization might be a simpler task for the representational system than the first acquisition of the categorical knowledge.

So in this Hindu view, when you are aware of a thing outside you, or even of your still inner witness within, the experience includes something as though physically present, shining at you within your perceptual world, and also some knowledge, whatever knowledge you have about it.

Thus the Self is characterized as having both the prakasha and vimarsha aspects, since it experiences a continuing awareness of its own presence, and because it has the self-evident knowledge, that knows this presence to be itself.

Or to take a more concrete example, consider a soccer ball. A proprioception of the ball is definitely a different object from the perhaps symbolically equivalent information of a center point in a coordinate system along with a radius and the equation of a sphere. There is a continuing perception of all the visible sides, perhaps the patchwork of pieces that make it up, each remaining visible and shining its shape and connectedness into your awareness, so that you perceive the whole. Thus "vimarsha" might understand it by a label, "ball", or with radius and center, or as something I can play with and kick, but "prakasha" experiences it with at least all the visible sides co-present, shining forth, fountain-like, as in each moment it presents itself again as perhaps raw data, uncooked into categories and higher knowledge but nonetheless present to your experience.

The distinction between prakasha and vimarsha is rather convenient and useful in the study of consciousness. Generally speaking psychology has been able to make progress with respect to vimarsha, the categorization and processing of information, but prakasha or subjective experience has fallen through the gap, it seems to me.

Synthetic Perception

Psychology has another gap (perhaps it's the same gap) in its explanatory domain, but this might be called synthetic perception. Let me start with some examples.

Stereoscopic merger

The first example is a most obvious case: stereoscopic merger of visual information into a 2D+ scene, not exactly full 3D perception because you can't see the back side of things and your depth distance estimates are probably not as good as your up-down and right-left distances. Object coherence is inferred, perhaps most easily by stereoscopic vision combined with movement, such that the trees in the background pass you by more slowly than the trees in the foreground, making it perceptible that a foreground tree is spatially unified with itself, and spatially separated from its background.

Color

The second example of synthetic perception is the experiential perception of color itself; one might call it color proprioception. I call it proprioception because the nature of it is so obviously concocted, so distantly related to the underlying phenomena, measures, or measurement apparatus. An electromagnetic spectrum within some frequency range is some intensity-valued function across the domain of frequencies. Lens-mapped onto the retina, each distinguishable pixel in the visual field picks up its own spectrum, and in this way a spectrum may map to, but is distinct from, an experience of color.

Indeed according to a theory Einstein worked on for much of his life, the physics of electromagnetism seems well described by having an extra dimension, beyond gravitational space-time, a "5th dimension" (cf. wikipedia.org/wiki/Kaluza-Klein_theory: "electric charge is identified with motion in the fifth dimension"). Remember that Einstein's life purpose was a theory of everything including both the irreconcilable electromagnetism and gravity theories. You can read that Kaluza showed that Maxwell's exact equations of electromagnetism fall out of Einstein's relativity equations when those are solved with this fifth dimension. It's called the "Kaluza miracle"; he ran around the house yelling "Victory!"

So this theory was good enough for Einstein through the 1920s and 1930s, and it has been resurrected and elaborated in string theory lately, so one could consider it as either true or at least good enough for now. It's fun, though not actually essential to my argument, that everything, including the human perceptual apparatus, physically lives within 5 dimensions: 3 of space, plus time, plus let's call it charge. The human perceptual apparatus maps each of these differently. If you don't want to call the last one Charge, you can handle light how you like (again with Maxwell's equations).

Chomsky made this point in a lecture at Swarthmore in the 1980's, and perhaps in print I know not where. He said, as I recall, Humans are designed so as to be able to access certain kinds of information, and not others, so we make syntactic calculations in planning how to produce sentences, and we can think about the meaning of what we're saying, but we can't perceive the syntactic calculations themselves. Here there are dimensions our system picks out to be able to perceive in certain ways, and others that we can't.

Evidently, the space dimensions are handled by something like an enhanced 2D view, where most information is in left-right and up-down, while some, but much less, is represented in a depth dimension, as may be convenient to a traveling organism, and all relative to the subjective coordinates natural to the organism.

The system builds its representation of time peculiarly; suffice it to say that the construction of interior representations of time (as prakasha, its felt durativity; as vimarsha, its subjectively assessed or measured quantity) is quite a different matter from the physical unfolding of time itself (as hinted in Bliss Theory), which goes physically, at a constant rate, unlike subjective perceptions of time.

And the fifth dimension, I'll say our systems generally ignore it, following Chomsky's point that we may not be evolved to know some classes of knowledge: except as encoded within the visual color system. Maybe the 5th dimension is there, but we aren't built to see it, except in a certain special way that doesn't even look like a spatial dimension at all. Just like a dog and what, a lichen, can't see color, we are born blind, too.

So the rods and cones detect light, the rods with a broad sensitivity to light frequency within the detectable range, and the three (or four for a few special "tetrachromat" humans) types of cones with somewhat narrower, but differently centered, spectral sensitivity ranges. That information is combined in the central nervous system to the unitary subjective experiences of color in a universe of categories or spaces presently unknown, the qualia of color. But clearly the combination of the underlying information of the activation levels of the different cones provides for a synthetic perception whereby all the information in the various separate sources is combined into a single percept of, say, aqua, brown, purple, or cream. What is the nature of this synthetic perception? I don't mean just, what information sources are detected by the sensory neurons and what calculations are made on them to generate the perceptual sensitivities we have. I'm asking what are the internal representations or activation patterns and structures such that we have the experiences of color that we subjectively, undeniably, do. That mapping is a synthesis of information sources, a synthetic perception. And it is a legitimate target of scientific inquiry.
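The input side of this mapping, though not the experience itself, can be sketched in a few lines. The sketch below assumes bell-shaped sensitivity curves with rough, illustrative peak wavelengths and a single shared bandwidth; these numbers and names are my assumptions for illustration, not measured cone data. It shows only how a whole spectrum collapses to three activation levels, which is the easy part of the question asked above.

```python
import math

# Rough, illustrative peak wavelengths (nm) and a shared bandwidth.
# These are assumptions for the sketch, not measured sensitivity curves.
CONE_PEAKS_NM = {"S": 440.0, "M": 535.0, "L": 565.0}
BANDWIDTH_NM = 40.0

def sensitivity(peak_nm, wavelength_nm):
    """Assumed bell-shaped sensitivity of one cone type at one wavelength."""
    return math.exp(-0.5 * ((wavelength_nm - peak_nm) / BANDWIDTH_NM) ** 2)

def cone_activations(spectrum):
    """Collapse a spectrum {wavelength_nm: intensity} into three cone activations."""
    return {
        name: sum(intensity * sensitivity(peak, wavelength)
                  for wavelength, intensity in spectrum.items())
        for name, peak in CONE_PEAKS_NM.items()
    }

if __name__ == "__main__":
    bluish = {450.0: 1.0}
    reddish = {600.0: 1.0}
    print(cone_activations(bluish))
    print(cone_activations(reddish))
```

Whatever the true curves are, the structure is the same: an intensity function over many frequencies goes in, three numbers come out, and the unitary percept of aqua or cream is built somewhere downstream of those three numbers.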

Rhythm

Rhythm perception (and thus musical perception): immediately on the second measure the details begin to be lost and some emergent integrated percept arises (prakasha only, vimarsha seems lacking to the layperson, though once there is a mapping to action, that is, for musicians, the percept can then also include the action-control structures compatible with the auditory scene thus vimarsha). This integrated percept certainly contains the global rhythm, the timing of the repetitive pattern, since everyone can tap or swing to the beat. It also contains the perceptually non-habituated or only-partially-habituated parts thereof. I don't presume to know the rules of this transformation but transformation there certainly is, as when you pop onto a new radio station and hear the music as a sort of static for perhaps a measure whereafter suddenly the noise resolves into a single and familiar musical impression. (In the west we let the melody dominate that perception and the rest is texture, perhaps, while in at least classical Indian music melody is hardly noticed at all while the sympathetic movements of the inner emotional system are almost exclusively attended to. Thus I personally dislike Western music with few exceptions as an endless worship of emotionally meaningless repetitiousness, a sort of highly active drooling doodling that brings no feeling at all while evidently its fans are fascinated, mentally quite fascinatedly obsessed like an idiot savant with the intricacies of minor variations on the meaningless. Yuck. But that's just me.)

But evidently rhythm perception is a synthetic perception, taking the various bits of the repeating structure, and the repeating structure itself, and its doodling noodling change over time, and finding some joint perception of its unified quality as a particular riff or perhaps as a whole piece of music. Although many it is also one.

Musical Emotion: The basic rule of social emotion is that people synchronize to the emotional states of others. Thus an opera singer straining at her notes seems to me not a heroic achiever of a high C in a compelling melody, but rather a stressed tuning fork, an over-tightened pretender, who brings me also (perhaps more than others) the feeling of being over-tightened and pretentious. Whereas, an emotionally present human being comfortable in their range singing for the joy of the note itself, e.g. Amira Willighagen, usually, makes me comfortable in my own breathing, and joyful in the perception of the note itself. This quite general phenomenon of emotional synchronization has many ramifications: e.g., don't proselytise rigidly or your audience will respond rigidly; etc.

Texture perception is another example of synthetic perception. Textures have emergent properties which integrate the lower-level information. In touch, when feeling a texture with the hand, perhaps it's not so much an average of the highs and the lows as a degree of resistance to action which may be a function of direction, number and sharpness of edges.

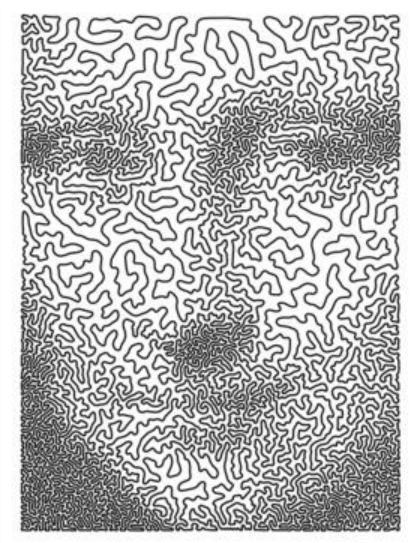

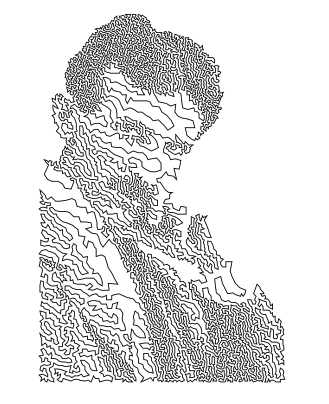

In visual textures, synthetic perception is different from averaged perception of multi-colored pixelated forms, or photomosaics. When the variation in the informational substrate is on a scale smaller than the perceivable fineness (or as we say in phonetics, the difference limen), perception can be understood as mere averaging. A visual processing system that takes the entire mosaic element sub-picture and averages its qualities of color or brightness before even pushing its information upstairs to be combined into the larger picture, essentially replacing the whole sub-element with its average, would seem to do as well as a finite-precision system could possibly do, or close to it. One might think of the variegated specklings and colors of a robin's egg as basically a light blue, because the many color elements as might be seen on close inspection have blended into a sort of global, or really, locally-integrated, percept. This is not the same as synthetic perception, by which I refer to the perception of wholes where the parts remain individually present in the perception. For example, synthetic perception of visual textures may be what you experience with a variety of granite countertops, viewed close enough to distinguish crystals in the stone, and yet showing some higher rich pattern with both the detail and the higher pattern; that is what I'd call a synthetic perception.

Object Coherence

There are plenty more examples, but object coherence is a big one. Have a gander at this one and think about what you see, how you can possibly see what you are seeing when you look closer, and also from farther away.

Continuity of surface and interior, continuity in time, are low-level inferences from the raw, you might say, spark-like, sensory data.

Besides stereoscopic coherence, the field of image processing has shown how grades of brightness can be used to infer coherent surface curvature w.r.t. the light source, in the absence of other factors. Similarity of color, shape, contour, can similarly be used to indicate object coherence.

Adjacency in visual space or time combined with similarity perceived or inferred helps with the perception of continuity. Object perception (as opposed to classification or symbolic calculations based on perceived objects) seems to have these continuities in them. It's like a fountain of processing, at the bottom are sensations, in the middle are the processing levels, and the shapes at the top are the categories found in the data and the reasoning, planning, mapping-to-actions developed there. As time unfolds this fountain of consciousness, input data repeatedly comes up into the bottom of it while the different higher levels are all processing their level of results too. Novelty vs sameness is the most basic distinction, centrally. The perception of sameness or continuity has to do with the continued input feed supporting the (same-enough) symbolic and classification structures which (merely) integrate them into object coherency; whereas novelty occurs where support breaks down and perceptual structure-building must construct anew. These functions (new vs same) are perceptually separable and might engage quite different implementation subsystems.

I think the solution, being the structure and development of a system with these qualities, which may be an artificial intelligence of the future, has three parts:

- 1) Using reinforcement learning we develop generalizable models of "stuff" based on solid-squishables, more-or-less disintegratables, and liquids, along with deep, rich experience of them particularly in the context of action, prototypically mouth and hand work even in the womb, perhaps not limited to swallowing and thumb-sucking.

- 2) Then we develop detailed object type models based on deep rich experience of one or more taken-as-prototypical instances.

- 3) Then we richly perceive the vaguely perceived by fitting adjustable prototypes to the weakly perceived, informationally poor inputs, carrying over the details from the prototype representation into the percept itself.

Stereoscopic movement as object learning

Look at a forest. See the trees as you walk on the path. The near ones fall behind you sooner, the far ones more slowly. One passes before the other. What do you learn? Change of group relationship under a stereoscopic view plus movement is the basis for object learning. If this passes in front of that, then it is not just closer, it is SEPARATE. There is stereoscopically inferred distance between the two, the front one becomes a foregrounded object, with characteristics inferrably similar to those seen on the side unseen. Thus we may infer that the tree trunk has an unseen back side more or less like the seen front side. It becomes an object separate from others in the scene, and it now exists in the round, though seen only from 180+epsilon degrees as you walk by.
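The geometry behind this can be sketched under simple assumptions: the walker moves in a straight line along the path, each tree is a point at some sideways distance from the path, and "separate" is decided by a made-up threshold on how differently two bearings change over one step. All names and numbers here are hypothetical and chosen only for illustration.

```python
import math

def bearing(walker_x, tree_x, tree_dist):
    """Angle (radians) between the direction of travel and the line of sight to a tree.

    The walker is at (walker_x, 0) heading along +x; the tree is at (tree_x, tree_dist).
    """
    return math.atan2(tree_dist, tree_x - walker_x)

def bearing_change(tree_x, tree_dist, step=1.0, walker_x=0.0):
    """How far the tree's bearing swings as the walker takes one step forward."""
    return (bearing(walker_x + step, tree_x, tree_dist)
            - bearing(walker_x, tree_x, tree_dist))

def looks_separate(change_a, change_b, threshold=0.05):
    """Assumed rule: bearings that change at clearly different rates belong to separate objects."""
    return abs(change_a - change_b) > threshold

if __name__ == "__main__":
    near = bearing_change(tree_x=0.5, tree_dist=2.0)   # tree 2 m off the path
    far = bearing_change(tree_x=0.5, tree_dist=20.0)   # tree 20 m off the path
    print(f"near tree swings {near:.2f} rad, far tree swings {far:.2f} rad")
    print("separate objects?", looks_separate(near, far))
```

The near tree sweeps across the view much faster than the far one for the same step, and that difference in rate is the raw material from which "this is SEPARATE from that" can be learned.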

Metrics to guide reinforcement learning

Let's keep our focus on motivated action and learning. Consider the tongue, lips, and jaw control systems that learn to squeeze liquid in and out of, and around within, the mouth while still in the womb. What is the metric of value applied in this reinforcement learning situation? What is the instinct which drives learning?

(1) Will to Power: A ratio between magnitude of effort and magnitude of effect.

Little effort, big effect? Awesome. The network likes that, reinforces it, learns.

(2) Will to Integrate: A ratio between coordination complexity and effect complexity.

Here I am seeking a measurement of the degree of success in integrating into a simpler center of control or understanding richer inputs and richer -- and as intended -- outputs. At each level simpler initiation of coordinated control manages higher complexity of process and intentional outcome. (Not yet the 3 Laws of Robotics; we also leave aside temporarily social motivation whether compassionate or competitive; and the hierarchies of Maslow, the chakras, and other evolutionary imperatives.)

Greater coordination in action leads to greater coordination in perception. Out of the static looms form. Spatial awareness is the first organization. Even in the womb, rubbing against oneself, tongue against palate, say, or thumb against palate or tongue, establishes immediate action-tied and spatial percepts of pressures at locations, sliding planes, perceived on both sides. With these in association with muscle actuation patterns and proprioceived actuation levels, learning can occur, to discover basics about physical reality: solidity, coherence under motion, conservation of matter for liquid.
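As a rough sketch of how the two metrics might be written down as reward signals, assume each trial gives us four non-negative numbers: an effort magnitude, an effect magnitude, a count of independent control signals used, and a count of distinct features in the outcome. How those quantities would actually be measured is left open; the function names, the counts as stand-ins for "complexity", and the equal weighting are all assumptions of mine.

```python
def will_to_power(effort, effect, eps=1e-9):
    """Effect per unit effort: little effort with big effect scores high."""
    return abs(effect) / (abs(effort) + eps)

def will_to_integrate(control_complexity, effect_complexity, eps=1e-9):
    """Outcome complexity managed per unit of control complexity.

    Both arguments are assumed to be simple counts, e.g. the number of
    independent control signals and the number of distinct outcome features.
    """
    return effect_complexity / (control_complexity + eps)

def reward(effort, effect, control_complexity, effect_complexity,
           w_power=1.0, w_integrate=1.0):
    """Weighted sum of the two metrics; the weights are arbitrary placeholders."""
    return (w_power * will_to_power(effort, effect)
            + w_integrate * will_to_integrate(control_complexity, effect_complexity))

if __name__ == "__main__":
    clumsy = reward(effort=10.0, effect=2.0, control_complexity=8, effect_complexity=2)
    skilled = reward(effort=2.0, effect=10.0, control_complexity=2, effect_complexity=8)
    print(f"clumsy trial: {clumsy:.2f}, skilled trial: {skilled:.2f}")
```

A learner reinforced by such a signal would drift toward actions that get a lot done with little effort and that command rich outcomes from simple initiation, which is the direction of development described above.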

We have merely touched on aspects of synthetic perception through a few examples, and have seen hints about a pathway of learnability, generalization, and use in action and perception. My take on this? To me this approach seems novel and compelling. What's your reaction?

Robot Emotion

Robot designers might apply, so that robots might learn from, these lessons about motivational framing, derived from the logical requirements of Darwin, which also apply to us humans. Evolved systems and organisms are subject to systematic motivational constraints, to marshal and focus efforts toward a hierarchy of priorities, which if ignored or misprioritized may prevent survival and/or reproduction. For example learning, as a prioritized motivational frame, is a biological imperative for a certain kind of species. Can these priorities be expressed as reinforcement learning metrics similar to the Will to Power and the Will to Integrate above? That's homework, for the robot designer, as important as, perhaps a way to implement, the Three Laws of Robotics.

The priorities must include (as applicable to the particular kind of species) at least these:

- Survival generally before reproduction, since you can't reproduce if you didn't survive to reproduce. Reproduction opportunities are multiple: if one is lost, all is not lost. Whereas, survival opportunities have an "absorbing barrier", if one loses on just one occasion, the whole game is entirely over. Therefore survival precedes reproduction as a motivational frame. DSL.

- Physical before emotional safety and security, generally.

- (in a social species) Social position after security.

- (in a learning species) Learning, which could offer functional benefits, slightly before free artistic expression which may serve only itself.

- Emotional liberation, everyone's last priority. Not sure why that's logically necessary, except that it is the low-energy state in a system with lots of adrenalin and high-energy disruptors going on; one might reasonably aim to be free of all that, if possible, or at least to use them rather than to be used by them.

- For a social-support-dependent species: maintaining the support, thus the voluntary acceptance, even the appreciation, of other members of the species community: empathetic capacity, emotional intelligence, and diplomatic skill.

- For a long-childhood species: maintaining the voluntary interest, the attraction of support-givers to the child. Strong pair bonding, parental adoration of and interest in the child, compassion for suffering and attraction toward helplessness; and for the child, being and remaining adorable until the age of independence. Etc. (The balance between child-side adorability and parent-side adoration had better lean to the adoration side, even if the adorability is dropping off at a certain age, to support a safer transition into independence, in such a species.)

- For a species that obtains its unique survival advantages by learning: learning.

- For a species that must acquire more movement coordination than is inherited in that species: learning of coordination.

- For a team ballistic hunting species: learning of muscular movement coordination, target identification and tracking, ballistic weapon control, teamwork.

Prioritization Systems

The following table organizes four-plus ostensibly independent and unrelated systems for prioritization of human motivation. These different systems are shockingly consistent with each other. Perhaps we should call this, Prioritization Logic. Think clearly, first, and maybe then we can.

| Logical | Maslow | Later Maslow | Hormone | Chakra |
| --- | --- | --- | --- | --- |
| Survival | Physiological | Physiological | Adrenalin | Muladhara: Root or Survival Chakra |
| Air, Painlessness, Rest, Sleep, Water, Food, Warmth, Shelter (in that order). | | | | |
| Reproduction | N/A | N/A | Testosterone | Svadhisthana: the chakra of sexuality and play energy |
| Sex is physiological to Maslow but separated by the other systems. | | | | |
| Increased Safety | Safety, Security | Safety, Security | Oxytocin | Manipura: at the solar plexus, belly energy, gut experience, willpower |
| Oxytocin = Trust, Trust = assessed safety. | | | | |
| Emotional security & warmth | Love/belonging | Love/belonging | Oxytocin & Serotonin | Anahata: Heart |
| Respect by self and by others | Esteem | Esteem | Serotonin, which tracks hierarchical position | Vishuddha: Throat Chakra, energy of self-assertiveness, hearing/speaking |
| Learning | N/A | Cognitive | Dopamine | Ajna, the "third eye" |
| | N/A | Aesthetic | Dopamine | Ajna |
| | Self-actualization | Self-actualization | Dopamine | Ajna |
| Emotional liberation | N/A | Transcendence | ?? | Sahasrara: Crown of head, doorway to the divine |

With motivation found to be at the center of the concept of self, and with Darwin imposing strong constraints on resource management prioritization in successful organisms, by the mere and bitter logic of evolutionary survival, it is not surprising if different thinkers in different millennia, as well as the scientists of hormonal motivation systems, each and all come up with a motivational prioritization schema roughly compatible with each of the others. It seems they are saying the same thing, something that needs to be said, something that organisms and humans need to get right, and that thinkers, if they are not blowing smoke, ought to be pretty consistent with. As seen above.

Now, a robot can recalculate motivational urgencies in the latest updated context and rank its priorities using simple discrete logic comparing a few continuous variables. That does not imply humans do exactly so, although I am implying humans do as if they do so. An experiential, or "prakasha", layer seems additionally present, coinciding with the emotional ebbs, flows, adjustments made, which the robot need not be given by its creator: palpable excitement or relief as they impinge on our attention, the proprioception of adrenalin-enhanced blood flows, might not be implemented and present in a still-usefully-functioning robot. But perhaps that layer was needed in evolutionary history and retained as at-least-not-useless, since they do help to track the state of the organism and provide data for emotion-supported planning purposes. They might be part of the control loop whereby emotional response controls behavior: P E A I B X could function primarily as a smart hormone mixer and pump, and the planned behaviors driven indirectly upon receipt of data from, I mean to say, upon experiencing, the by-then-existing hormonal state of the actuator system. Then the planning system can do what makes sense to it within the range of what's compatible with one's physiological/hormonal state. In short, we don't know the interior information flow pathway from motivational-frame-prioritization to action-planning; even this indirect route is possible.
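The robot side of that first sentence can be sketched very simply. Assume each motivational frame carries an urgency between 0 and 1, recomputed from the latest context, and assume a fixed ordering of frames taken loosely from the Logical column of the table above. Any frame whose urgency crosses a threshold is served in that fixed order; the rest are ranked by urgency alone. The frame names, the 0.5 threshold, and the rule itself are illustrative assumptions, not a claim about how humans or any existing robot do it.

```python
# Assumed fixed ordering, loosely following the "Logical" column of the table.
PRIORITY_ORDER = [
    "survival", "reproduction", "safety", "belonging",
    "esteem", "learning", "liberation",
]

def rank_frames(urgency, threshold=0.5):
    """Rank motivational frames from a dict of continuous urgencies in [0, 1].

    Frames at or above the threshold come first, in fixed priority order.
    The remaining frames follow, most urgent first (ties keep priority order).
    """
    urgent = [f for f in PRIORITY_ORDER if urgency.get(f, 0.0) >= threshold]
    calm = sorted(
        (f for f in PRIORITY_ORDER if f not in urgent),
        key=lambda f: -urgency.get(f, 0.0),
    )
    return urgent + calm

if __name__ == "__main__":
    context = {"learning": 0.9, "survival": 0.6, "esteem": 0.2}
    print(rank_frames(context))
```

Note that survival outranks learning here even though learning is numerically more urgent: that is the discrete logic laid over the continuous variables. Nothing in the sketch corresponds to the experiential layer, which is the point of the paragraph above.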

Next, a topic in vimarsha, language itself. We will move toward language after some steps on cognition itself, logical methods, and the role of (functional) logic in evolution and in psychological characterization of organismal cognition.

Cognition as Simplification

Can we enumerate and order the elements of human language in an evolutionary and functional sequence? I think so.

Simplification and its subtype (Useful) Abstraction play a key role in what I will call cognition, which is certainly deeper evolutionarily and conceptually than language. (I call the information-processing cascades implemented by living or non-living machinery by the name "cognition".) The basic and unavoidable tasks of an organism are survival and reproduction. Given the complexity of a dangerous world and the limitations of perception and action, the organism, being required to respond with discretely-characterizable choices such as Approach or Flee, must Simplify. It is required, if survival and reproduction are to reliably occur, for the organism to reliably convert the impingement of infinitely variable circumstance into the limitation of choice. This is a job of simplification, obviously, since many (circumstances) are reduced to few (choices), but to be effective it must be a job of useful abstraction whereby richly variable circumstances are assessed by the organism essentially as instances of a category -- the category that the action taken represents.

Perhaps, for example, a toxin gradient is detected, and the paramecium orients downward on that gradient and flees at a pace in some proportion to the toxin intensity. It (can be analysed as having) made a discrete decision, and acted accordingly. At a lower but still high priority a food or nutrient gradient may also be detected, and approach triggered.

These are discrete, categorical, behavioral outcomes -- even if they may also be scaled quantitatively and roughly continuously by some intensity level of the input and of the behavioral response. A (perhaps fuzzy) logic has applied, and the discrete categories triggered. The world has been abstracted from its infinite and not-even-perceivable complexity into two, what we may call, categories. These are both perceptual categories, defined as internal processes that categorize the organism's world, and behavioral categories, which are the choices that the organism must make, so that it responds in a way that allows it to survive and to reproduce. There may be other layers as well.
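The paramecium's logic, as analysed above, fits in a few lines. This is a sketch under stated assumptions: the two gradients arrive as non-negative numbers, a made-up threshold decides whether each is "detected", and response speed is simply proportional to intensity. The "idle" outcome is my addition for the case where neither gradient is detected; the thresholds and gains are placeholders.

```python
def decide(toxin_gradient, food_gradient,
           toxin_threshold=0.1, food_threshold=0.1,
           flee_gain=1.0, approach_gain=1.0):
    """Reduce continuous inputs to a discrete action plus a continuous speed.

    Toxin is checked first, so fleeing outranks approaching food.
    Returns a tuple (action, speed).
    """
    if toxin_gradient > toxin_threshold:
        return "flee", flee_gain * toxin_gradient
    if food_gradient > food_threshold:
        return "approach", approach_gain * food_gradient
    return "idle", 0.0  # assumed default when nothing is detected

if __name__ == "__main__":
    print(decide(toxin_gradient=0.8, food_gradient=0.9))
    print(decide(toxin_gradient=0.0, food_gradient=0.4))
    print(decide(toxin_gradient=0.0, food_gradient=0.0))
```

Infinitely many circumstances go in and one of a handful of categories comes out, with the intensity carried along as a scale factor: simplification, and, if the thresholds are set well by selection, useful abstraction.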

The physical implementation of perception, decision-making, and action in single-cell organisms is, no doubt, a set of chemical cascades that transduce the environment into internal chemical signals which then internally transduce into anatomical adjustments: movements, behavior. Fine. If it keeps the organism alive and reproducing, then Darwin applies: survival and selection. And said organism lives and reproduces, and its system perpetuates. Considered from the logical or cognitive perspective, the highest-priority discrete outcome, with its two alternatives being survival and death, is the ultimate abstraction, classifying the world into survivable-for-me and not.

There is a logical structure to life itself, we may conclude. Life is constantly filtered through the match between its capabilities and its conditions. Match: survival, selection. Mismatch: not.

This logic is fatal. Only through the window of this logic comes survival, reproduction, and evolution itself. And yes, later, language.

Cognitive science as an epistemological layer of biological science.

I was trained as a linguist and cognitive scientist, to use the integrated tools of information theory (bits), computer architecture (memory, CPU, data communications, sensors and actuators), and foundational logic itself from Boolean truth tables defining logical combinations of atomic propositions to Frege's predicate calculus and various quantifiers, and up through the hierarchy of mathematical logics and category theories used to model human language semantics, in the form of the meaning of sentences.

These tools do not actually assert one way or another what the mechanism or internal processes must be for an organism like a language-producing human to generate and express thought-embodying sentences. We could be crows or lizards as far as a formal linguist is concerned, so long as the thing/person/process/robot/whatever is capable of functionally generating and using the complex behaviors we analyse. Our job is not to specify what sensors sense or how muscle activation is coordinated or what is the inner subjective experience, if any, of a language producing device. The cognitive scientist's job is to be clear about the logic that the device must be following when it systematically uses this form or behaves in that way, produces some particular bit of language. For example, "No" is an utterance of English, and its existence implies, by means of logic, quite a lot of things about what kind of thing/person/etc. could produce it.

There have to be, let's call them, people; they have to have motivated or informational frames and a theory of mind; there has to be the possibility that these frames differ from one person to another; there has to be the possibility of expression in words by one person that they have detected such a difference; and one has to have done so. This is a logically detailed set of requirements, but physiologically, chemically, neurologically, subjectively, physically, electrically, anatomically, it is very underspecified, and we depend on the biologists, the biochemists, the physiologists, the folks with electrodes, microscopes, and centrifuges, to tell us how organisms like people do it in full detail. But characterizing what they do and what that implies, that's our job, the cognitive scientists'.

In a way we are psychologists, but psychologists seem to be stuck in the weakly-characterizable, gray, fuzzy world of undergraduate statistics-for-non-math-majors, and they have the hardest time asserting anything to be a fact at all, whereas a linguist can generate compelling lists of facts at will: just give them a native speaker informant of any language and ask for a list of minimal pairs (words different in pronunciation by only one sound), and they will busily start building the logical structure of the phonological system, and soon have a lexicon and a grammar and a phonetic chart and a bunch of tools for learners that let random visitors to that country interested in that language get up to speed ASAP. These achievements aren't made (usually) by spending a lot of time wondering and running statistical tests whether P is really the same as or different from B. Your informant says so; the facts of a language are generally (not always, but quite generally) found to be validly intersubjectively shared across all its native speakers; the informant's assertion is most likely the reflection of, say, 10 million native speakers each pronouncing many thousands of words with that same particular sound in that same particular way. Well, there is a rare edge case like Philadelphia Short A, but Very Rare, and we need to get a lexicon and a grammar out so let's move along a little bit and make some broad generalizations.

When in 1993 Psych Review rejected "A Theory of Humor" with the comment that it was "not psychological enough", it clarified that the field of research psychology suffers from being like Sheikh Nasruddin, who searched under the street lamp for the keys that he had lost elsewhere. Why? Because that's where he knew how to search. They could hardly mean that the subject of humor perception is not psychological; but it makes sense if they refuse to lift their eyes from their methodology. Then the significance of the very field is called into question, when you are unable to conceive certain thoughts, look in certain baskets, ask bigger questions, derive bigger generalizations, take into account a wider variety of facts. If you limit your purview to a correlation coefficient or an ANOVA table outside any context of logical dependency and knowledge, you will have a hard time understanding the rich structure of problems and solutions that govern and constitute biological systems.

Look, I'm not bitter, and you are not alone in your self-imposed limitations. The field of linguistics has an exactly similar blind spot. A theoretical linguist can be defined as a person who is unable to consider a thought that is not based on the structuralist method. Yet facts of language exist which are not the typical structuralist observations, like quantitative sociolinguistic variation, or the socially-shared, fine control of vowel coloring found in Veatch 1991. Similarly, evolutionary biologists that I know basically refuse to think about or discuss human evolution, because you can't exactly run ethical experiments on humans. None of this means that the questions don't exist, nor that answers can't be found or justified.

In short, a cognitive scientist, as opposed to a psychologist, is comfortable with a more inclusive, multi-perspective, wide-eyed, frankly logical approach that allows the building of facts upon other facts, and developing hierarchies of structure in their search for deeper generalizations, richer insights about the nature of things, organisms, animals, people. So indeed, perhaps I do have a different and more leading-edge take on the thoughts of the day related to human psychology.

All this is by way of an argument that the task of characterizing the logic of the process or system is my task, and the way I do it is with logic. Luckily, everyone gets out of the way, when you use logic on them.

Evolutionary/Functional/Logical decomposition of the elements of Language

Ah, simplification. I know that my life over time without interaction seems to turn into a nest of threads.

It is social interaction that brings mental simplification. Expressing it simplifies it, makes sense of the goal structure, what belongs where, what can be ignored, what's within the generalization.

So language is not just abstract, not just meaningful, but helpfully simplifying.

A speaker's task in language production is to come up with something sayable and useful, that is, (1) simple enough as a word, phrase, sentence or set of sentences that it can be expressed given the tools of language, while also (2) capturing, in some sense, some essence of the circumstances the speaker desires to describe and communicate, for another to share in the expressed essence or meaning, and also (3) uptakeable (where functional).

Thus economy wins, poetic capture of some situational slant wins, expressive effort loses. Elaborated understanding and point by point enumeration of propositions representing all or even many or even more than very few of the details of even only the most relevant circumstances: loses. Yet understanding is limited and blocked by the resulting information shortage; how can a listener put things together, unless carrying out their own goal directed quest, fitting what they get from this input like an arbitrary puzzle piece into their own motivated frame and active landscape?

(On a related note, X Y Z.) AI needs to learn sharing; therefore share-ability is a criterion. Sharing relates to cooperation. Humans cooperate by sharing goals also. We manage our emotions together. We laugh together, sharing moral interpretations. We learn what is important, we learn moral opinion together. In these ways also, learning underlying structure is as important as learning skilled action.

The logical problem of word creation can be understood in an assumable context of subjective organismal being and activity. That is, you might say archetypally, one is an organism, one is alive, one has internal spatial awareness and a capacity for sound, smell, taste, temperature, touch, sexual stimulatory sensory perceptions; one has parents (assuming the species reproduces sexually) and lives within one or more dominance hierarchies enabling mutual survival as a populous and gregarious species; one lives by movement including coordinated actuator activation-pulse trains (hierarchies and sequences and relative timings and magnitudes) within a perceptual space constructing an internal vision of local 3D reality integrating sensory modalities from sight, touch, sound, and also proprioceptive "direct" spatial perception a la worm consciousness.

In human organisms the capacity for multi-layered cognition is elaborated. Layering as in the linguistic sign whereby a word comprises both a sound sequence layer and a meaning layer. Layering as in the perceptual layering of the visual system, processing up in layers starting from rod and cone dots into oriented micro-segments into higher and higher level patterns and ultimately into full object percepts. Enough layers enable representation of changing situations across time, so that time itself is subjectively constructed, so that the distinction of possible from actual is rendered internally practicable; and with the distinction necessarily negation itself; so transactions become conceivable; also, so that richer models may arise of self-from-actions, self-vs-others, the interpersonal or social significance of action, and valuation itself which is the comparison and value-assessment of not just factual but counterfactual, to include possible, desirable, feared, remembered circumstances.

Matter whether solid, liquid or gaseous in state, must enter and leave the body via state-specific orifices, so that digestion, respiration, etc. may occur and metabolism continue at least for a time. Life goes on, and in this context coordinated action (not excluding communication) occurs following a system-state-dependent valuation hierarchy a la Maslow or the chakra system, since of course priorities for an organism may be ranked, death, suffocation, overheat, overcold, sleeplessness, thirst, hunger, non-reproduction, dishonor, rejection by one's community, ignorance, emotional suffering, inability to speak one's truth, ignorance of one's true nature as divine consciousness. This is hardly controversial.

In any particular circumstance, a speaker can remember relevant linguistic elements present therein, and join them in combined forms enabled by language's simple essential structures which nonetheless capture some useful essence communicably with others. The invention of a name for a thing seems to go along with the invention or discovery of the thing itself, except that it might need a few instances sharing some similar quality or use for it to acquire its generality, abstractness, and communicability. But movement/action, planning, transacting, etc., the basic stuff of the species-universal context of being a human organism, will of course always be present, and certainly do motivate plenty of linguistic categories which therefore in a multi-layered cognition system come into shared use. Some categories are basic, arise from the nature of the species, and are thus useful in shared understanding: do/move, go, return, drink, eat, i/o, sleep, fuck, touch, smell, burn, freeze. Also because we plan and target the counterfactual using values: like, dislike; negation. And because as a planning and a social species we cooperate around plans: direct, obey, accept, reject. Conveniently capturing things around goals we get: path, target, hit, miss, start, stop, tool, obstacle. How do such and other categories arise, find utility, become shared?

The construction of language categories like the above, or even more abstractly verb, noun, and modifier, seems certainly convenient for a species that acts, manipulates objects, and mentally recognizes qualities. But the universality of action, thing, and quality seems genetically determined, since in the natural flow of physics populated by organisms, the boundaries of things and their mutual similarities captured by any conceptual or labelling tree of categorical divisions thereof seem, like I say, convenient but not necessary. Indeed, as Peterson points out, science and valuation are different, as a map of the furniture and free paths in the room is different from the action-driving desire of thirst and intention to go through the room to get some milk from the fridge. Humans indeed always operate in a motivated frame, and the target of one's current motivation certainly goes beyond the driving of planning and action to actually structuring perception, which ignores, doesn't even see, circumstances not relevant as aid, enablement, obstacle, path or tool or goal itself. Thus read a book with a question in mind; write a program to learn a programming language; live following a goal to experience motivation, progress, and energy.

Categories arise within the process of useful, that is to say, time-bound, goal-directed activity. Whatever may help action toward goals may become useful as a mental or, if shareable, communicable, then as a linguistic, category. Stuff acquires words given perceptual and productive capacities: when sound itself ramifies into high and low, burst and continuant, all vibrational in their nature, a playground of possibility for associating sounds, and when sequence is part of cognition, sequences of sounds, to shareabilities of utility. In the moment of shareability is utterance and uptake. In the discovery of utility is abstraction since repetition of an essence in always-variable circumstances picks out something shareably understandable and constant, that is to say, removed from the variability, suitably abstract.

For this reason the spiritual being of interiorly experienced consciousness before construction of time, lacking inner constructs of goal, desire, and utility, success and failure, may nonetheless include the simple (direct, uninterpreted) perception of vibration and light, sound and sensory flow. If captured by desire, so to speak, then the net of categories makes sense; yet bliss flows when simply sound itself vibrates within, and the emotional being within is unbound. Wisdom consists in the not being so captured, the ability to drop the unnecessary structure system when it's time for being, for emotional unconditionality, liberated joy. Committed experience of vibration itself, i.e. active wholehearted chanting, brings awareness to a state free of the net of categories and the emotional enslavement of desire-entrapped hunting whether successful or not.

Yet the hunt is itself a lie, emotionally, that is to say in the most important way. You subserve the goal, you entrap your feelings into the progress of the hunt, and at best if you pick a meaningful goal then once achieved you may momentarily let yourself transact with yourself to peek through the curtain of emotionally-bound being to that greater being which is free, unbound, emotions unlimited by stories and honor or dishonor, emotions which can go as high as the capacity of the heart touch you come through, the vastness of the heart is revealed, the edges of the infinite are surpassed, freedom, freedom, the abode of satisfaction, the fire hose of whatever you need, the peace of timeless being, the surrendered inner cleanliness. Perhaps hunt part-time if that is your karma but fulltime it is no one's karma to hunt and hunt always. On the contrary it is a play where you are the actor and the stage and it is your choice to play in your role. Also your choice to be free, you can do it. Who said stop overflowing with joy and energy? Your babysitter can take some time off. Why isn't every sound the belling of joy for you? You have permission to let it be. Let it be!

You can even pay attention to the vibration within the sounds of the category labels themselves, at the same time the labels are sounding in your own mouth or another's, and even at work hunting you can have some awareness sharing the point while still seeing and being in the unconditioned emotional ground, which is after all always present as the ground and substance of perception.

We are beings of continuous perception, ever fresh; the flow of what comes now is always here with us.

When the longed-for tender caress finally touches your cheek, do you think and think and think of the status achieved and the binding of the relationship, or do you experience the continuous perception of the touch, the stroke, the warmth, the arising and lengthening and subsiding in love of this note of the conscious awareness of this, now? The more precious the more unintervened-upon by calculations of progress. Feel fully. Quit looking at the stands while you're still running the race.

Working Memory, Layering, Math

Let's say for convenience that working memory can hold some specific number of elements at once (say 7, but actually that would be a matter for research to determine), but also let's say it has a layering structure, such that you can do Chomskyan Merge on a complex subset of elements held in working memory. A Merge operation substitutes a higher category for the subset. As a consequence you can pop up a layer, substituting out up to 7 elements and replacing them with a single one, the Merge of the complex subset, which is essentially a label for that subset. Having replaced 7 elements with 1, your effective working memory now has room in it for 6 more elements. This process being recursive, quite complex thoughts can be built up (and expressed in language) despite the limitations of working memory. The task of expressing complex sentences becomes the unpacking of stacks of merged elements. This is not a bad way of thinking about linguistic cognition.
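Here is a toy sketch of that idea in Python, just to make the bookkeeping concrete. Everything in it is my assumption for illustration: the names WorkingMemory, Chunk, merge and unpack are made up, the capacity of 7 is the convenient guess from above, and nothing here claims to model how brains actually do it.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

CAPACITY = 7  # the convenient guess from the text, not a measured value


@dataclass(frozen=True)
class Chunk:
    """A merged subset: one label standing in for several parts."""
    label: str
    parts: Tuple["Item", ...]


Item = Union[str, Chunk]


class WorkingMemory:
    def __init__(self, capacity: int = CAPACITY) -> None:
        self.capacity = capacity
        self.slots: List[Item] = []

    def free(self) -> int:
        """Number of slots still available."""
        return self.capacity - len(self.slots)

    def push(self, item: Item) -> None:
        if self.free() == 0:
            raise OverflowError("working memory is full; merge something first")
        self.slots.append(item)

    def merge(self, start: int, end: int, label: str) -> Chunk:
        """Replace slots[start:end] with a single labelled Chunk."""
        chunk = Chunk(label, tuple(self.slots[start:end]))
        self.slots[start:end] = [chunk]
        return chunk


def unpack(item: Item) -> List[str]:
    """Recursively unpack a stack of merged elements back into a flat sequence."""
    if isinstance(item, Chunk):
        flat: List[str] = []
        for part in item.parts:
            flat.extend(unpack(part))
        return flat
    return [item]
```

Push seven words, merge all seven under one label, and free() goes from 0 to 6; merge again later and the earlier chunk simply becomes one part of the new one. Calling unpack on the top chunk gives the original words back in order, which is the "unpacking of stacks" that sentence production would have to do.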

Math is based on, or I would say, read off, geometric or other intuitions that can be developed by whatever means by the mathematician. But as shared with others, math is a language, made for convenience, constructable by the mathematician to refer to and express their intuitions.

If the Sentences are equations of two expressions, each recursively the sum, difference, product, or quotient of two expressions, we get a grammar like this:

S => Expr '=' Expr

Expr => Expr { '+', '-', '*', '/' } Expr

A few rules elaborate the above to provide for decimal numbers, variables, powers, logs, integral or summation signs, etc. within expressions.
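To see the two rules at work, here is a small parser sketch in Python. It fills in things the rules above leave open, and those fillings are my assumptions, not part of the grammar as stated: atoms are decimal numbers, variable names, or parenthesized expressions; '*' and '/' bind tighter than '+' and '-'; and operators of equal rank group to the left. The names tokenize, Parser and parse are made up for the example.

```python
import re

# Assumed atoms: decimal numbers and variable names; plus the operators and parentheses.
TOKEN = re.compile(r"\s*(\d+(?:\.\d+)?|[A-Za-z_]\w*|[-+*/=()])")

# Assumed ranking: '*' and '/' bind tighter than '+' and '-'.
PRECEDENCE = {"+": 1, "-": 1, "*": 2, "/": 2}


def tokenize(text):
    text = text.rstrip()
    tokens, pos = [], 0
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {text[pos]!r} at {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


class Parser:
    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def take(self):
        token = self.peek()
        if token is None:
            raise SyntaxError("unexpected end of input")
        self.pos += 1
        return token

    def sentence(self):
        # S => Expr '=' Expr
        left = self.expr()
        if self.take() != "=":
            raise SyntaxError("expected '='")
        right = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return ("=", left, right)

    def expr(self, min_rank=1):
        # Expr => Expr op Expr, made unambiguous by ranking the operators.
        left = self.atom()
        while self.peek() in PRECEDENCE and PRECEDENCE[self.peek()] >= min_rank:
            op = self.take()
            right = self.expr(PRECEDENCE[op] + 1)
            left = (op, left, right)
        return left

    def atom(self):
        token = self.take()
        if token == "(":
            inner = self.expr()
            if self.take() != ")":
                raise SyntaxError("expected ')'")
            return inner
        if token in PRECEDENCE or token in ("=", ")"):
            raise SyntaxError(f"unexpected token {token!r}")
        return token


def parse(text):
    """Parse one Sentence into a nested (operator, left, right) tuple."""
    return Parser(tokenize(text)).sentence()
```

For instance, parse("a + b * 2 = c") gives ('=', ('+', 'a', ('*', 'b', '2')), 'c'), while a bare "a + b" is rejected because a Sentence needs its '='. The nested tuples are the Merge structure again: each operator labels the pair of expressions it joins.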

Part of a mathematician's job is to make up more convenient notations, which so far as I know can be captured in rules like the above.

H Notation

Here's a notation I find very convenient; I refer to it as H notation.